Topology: A general term that describes the network structure of the cluster. It covers nodes, networks, communication interfaces, adapters, and so on.

Logical network interface: A port of a physical adapter as represented in AIX (as a Layer 3 device, for example, en0).

IP label: The label that is associated with an IP address as defined by the name resolution method (DNS or /etc/hosts).

IP alias: An AIX feature that adds an IP address to an interface without replacing the interface's base IP address.

Boot IP (Base IP): The default IP that is configured on an interface by AIX on startup; the base address of the interface.

Service IP: The IP address over which a service is provided; PowerHA keeps it highly available. It is a resource, not part of the network topology.

Persistent IP: An IP alias that is assigned permanently to a node. It always stays on the same node.

Boot interface (Base interface): Any interface that can host a service IP. (In PowerHA 7.1 only boot interfaces exist; earlier versions had both boot and standby interfaces.)

A physical network connects two or more physical network interfaces. These interfaces (which are usually on one non-routed physical network or VLAN) can be grouped together to form a logical network. Logical networks are known by a unique name in PowerHA (for example net_ether_01). A logical network can be viewed as the group of interfaces used by PowerHA to host service IP labels.

During cluster configuration, the discovery process uses the /etc/hosts file and the interfaces on the nodes to create the following files in /usr/es/sbin/cluster/etc/config:

clip_config: contains details of the discovered interfaces; used in the SMIT F4 pick lists.

clvg_config: contains details of each physical volume (PVID, VG name, status, major number, etc.) and a list of free major numbers.

Important:

All communication interfaces that are configured in the same PowerHA network must have the same subnet mask. Interfaces that belong to a different network can have either the same or a different subnet mask.

--------------------------

Persistent Node IP:

A persistent node IP is an IP alias that can be assigned permanently to a node. It always stays on the same node (it is node-bound) and can coexist with other IPs. It is not part of any resource group, and because it always points to a specific node regardless of whether PowerHA is running, it can be used for administrative purposes.

The persistent IP labels are defined in the PowerHA configuration, and they become available when the cluster definition is synchronized. When PowerHA starts, it checks whether the alias is available. If it is not, PowerHA configures it on an available adapter, and it remains available even if PowerHA is stopped. If the interface with the persistent IP fails while PowerHA is running, the persistent IP is moved to another interface on the same node. If the node fails, the persistent IP label is no longer available.

In a configuration with multiple interfaces per network, it is common to place the persistent alias in the same subnet as the default route. This typically means that the persistent address is in the same subnet as the service addresses.

Default gateway (route) considerations

If you link your default route to one of the base address subnets and that adapter fails, your default route is lost. To prevent this, use a persistent address and link the default route to its subnet. The persistent address is active while the node is active, and therefore so is the default route. If you choose not to do this, you must create a post-event script to re-establish the default route when this becomes an issue.
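To make the default-route advice concrete, here is a small Python sketch that reports whether a default gateway sits in a boot (base) subnet or in the persistent subnet. It is only an illustration and not a PowerHA tool. The function name and all addresses are hypothetical, and it uses only the standard ipaddress module.

```python
import ipaddress


def default_route_subnet_role(gateway, boot_cidrs, persistent_cidr):
    """Return "persistent", "boot" or "unrelated" for the given default gateway.

    boot_cidrs / persistent_cidr are interface addresses in CIDR form,
    e.g. "10.1.1.10/24" (hypothetical values).
    """
    gw = ipaddress.ip_address(gateway)
    if gw in ipaddress.ip_interface(persistent_cidr).network:
        return "persistent"  # route stays usable while the node is up
    for cidr in boot_cidrs:
        if gw in ipaddress.ip_interface(cidr).network:
            return "boot"  # route is lost if this adapter fails
    return "unrelated"


boot = ["10.1.1.10/24", "10.1.2.10/24"]
persistent = "192.168.100.51/24"
print(default_route_subnet_role("192.168.100.1", boot, persistent))  # persistent
print(default_route_subnet_role("10.1.1.1", boot, persistent))       # boot
```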

Not every adapter needs an address that is routable outside the VLAN. Only the service and persistent addresses must be routable. The base adapter addresses and any aliases used for heartbeating do not need to be routed outside the VLAN, because they are not known to the client side.

--------------------------

Service IP

The service IP is used to access applications or nodes. It is monitored by PowerHA and is part of a resource group. There are two types:

Shared service IP: It can be configured on multiple nodes and is part of a resource group (this type is used most of the time).

Node-bound service IP: It can be configured on only one node (it is not shared by multiple nodes); it is used with concurrent resource groups.

Firstalias

PowerHA 7.1 automatically configures the service IP with the firstalias option. Firstalias means that the service IP is the first address among the aliases on an interface. For example, when PowerHA is down, only the boot IP is on an interface. When PowerHA starts, it adds the service IP as an alias, but not as the second address: it becomes the first one on the list (netstat output shows the service IP first and the boot IP below it). The reason behind this is routing. When you ping an IP that resides on an adapter with multiple aliases on the same network, the first address on the list sends the reply, even if the ping was sent to the second alias. This can cause problems with firewalls, because the reply comes from an address other than the one that was contacted. To avoid this issue, the service address is the first one by default; however, firstalias can be disabled if needed.
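The ordering effect can be modeled in a few lines of Python. This is a simplified, hypothetical model: the Interface class and the addresses are made up, and this is not how AIX stores aliases. It only shows why putting the service IP first makes it the address that answers.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Interface:
    """Hypothetical model of the address list of one interface (index 0 = first)."""
    name: str
    addresses: List[str] = field(default_factory=list)

    def add_alias(self, ip: str, firstalias: bool = True) -> None:
        # firstalias puts the new alias in front of the boot IP; otherwise it is appended.
        if firstalias:
            self.addresses.insert(0, ip)
        else:
            self.addresses.append(ip)

    def replying_address(self) -> str:
        # Simplification of the behavior described above: the first address answers.
        return self.addresses[0]


en0 = Interface("en0", ["10.1.1.10"])   # PowerHA down: only the boot IP
en0.add_alias("10.1.1.100")             # PowerHA up: service IP added with firstalias
print(en0.addresses)                    # ['10.1.1.100', '10.1.1.10']
```

With firstalias disabled (`add_alias(ip, firstalias=False)`), the boot IP would stay first and would keep answering.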

When multiple service IP addresses are used, PowerHA analyzes the total number of aliases and assigns each service address to the least loaded interface. PowerHA allows you to define a distribution preference, but it always keeps the service IP active, even if the preference cannot be satisfied:

- Collocation, Anti-collocation: With the collocation setting, multiple service IPs are allocated on the same boot interface. With the anti-collocation setting, they are distributed across all boot interfaces on the network.

- With source: When multiple service IPs are used, this preference lets you choose one service label as the source address for outgoing communication.
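Here is a rough Python sketch of the least-loaded placement with the two collocation preferences. It is a model based on assumptions, not PowerHA's real algorithm. The function name, interface names and alias counts are hypothetical, and the "with source" preference is not modeled.

```python
def distribute(service_ips, alias_counts, preference="anti-collocation"):
    """Map each boot interface to the service IPs placed on it (hypothetical model).

    alias_counts: boot interface name -> number of aliases already on it.
    """
    if not alias_counts:
        raise ValueError("no boot interface available on this network")
    load = dict(alias_counts)
    placement = {name: [] for name in load}

    def least_loaded():
        # Fewest aliases wins; ties are broken by interface name for determinism.
        return min(load, key=lambda name: (load[name], name))

    if preference == "collocation":
        placement[least_loaded()].extend(service_ips)  # all on one interface
        return placement
    for ip in service_ips:  # anti-collocation: spread across the interfaces
        target = least_loaded()
        placement[target].append(ip)
        load[target] += 1
    return placement


print(distribute(["svc1", "svc2", "svc3"], {"en0": 1, "en1": 1}))
# {'en0': ['svc1', 'svc3'], 'en1': ['svc2']}
```

With only one boot interface, the anti-collocation branch still puts every service IP on it. This matches the rule that the service IP is kept active even when the preference cannot be satisfied.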

--------------------------

IP address takeover (IPAT) mechanisms

1. IP replacement (PowerHA versions 5.x, 6.x)

2. IP aliasing (PowerHA versions 5.x, 6.x, 7.x)

PowerHA v7.1 (and later versions) supports IP address takeover (IPAT) only through aliasing.

IPAT via IP replacement (older method, no longer available):

The service IP replaces the existing address on the interface, so only one service IP can be configured on an interface at a time. The service IP should be on the same subnet as one of the boot IP addresses. Other interfaces on this node cannot be in the same subnet; they are called standby interfaces and are used if the boot interface fails. IPAT via IP replacement can save subnets, but it requires extra hardware.

IPAT via aliasing (the only method available since PowerHA 7.1)

The service IP is aliased (using the ifconfig command) onto the interface without removing the underlying boot IP address. This means that more than one service IP label can coexist on one interface. Each boot interface on a node must be on a different subnet. Because it removes the need for one interface per service IP, IPAT through aliasing is more flexible. It also reduces fallover time, because adding an alias to an interface is much faster than removing the base IP address and then applying the service IP address.

--------------------------

Rules regarding IP configurations:

General rules:

- When multiple boot IPs are used (multiple adapters), each must be on a separate subnet to allow heartbeating

- Boot IPs do not have to be routable outside of the cluster (they can be, but it is not necessary); persistent and service IPs should be routable

- All interfaces in the same network must have the same subnet mask (interfaces on other networks can have a different subnet mask)

- The subnet mask of the boot IP will be used for all IP aliases configured on the same network interface.
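The general rules above work well as a quick pre-check. The sketch below uses a hypothetical helper and only the Python standard library. It checks that all interfaces of one PowerHA network use the same subnet mask and that each node's boot IPs are on separate subnets. It does not check routability.

```python
import ipaddress


def check_network(boot_ips_by_node):
    """Check one PowerHA network; boot_ips_by_node maps node -> list of "ip/prefix"."""
    problems = []
    interfaces = [ipaddress.ip_interface(c)
                  for cidrs in boot_ips_by_node.values() for c in cidrs]
    masks = {iface.netmask for iface in interfaces}
    if len(masks) > 1:
        # Rule: all interfaces in the same network must have the same subnet mask.
        problems.append("different subnet masks: " + ", ".join(sorted(str(m) for m in masks)))
    for node, cidrs in boot_ips_by_node.items():
        subnets = [ipaddress.ip_interface(c).network for c in cidrs]
        if len(set(subnets)) != len(subnets):
            # Rule: multiple boot IPs on one node must be on separate subnets.
            problems.append(f"{node}: boot IPs are not on separate subnets")
    return problems


print(check_network({"aix1": ["10.1.1.10/24", "10.1.2.10/24"],
                     "aix2": ["10.1.1.11/24", "10.1.2.11/24"]}))  # []
```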

Service IP rules (with IPAT through aliasing):

- When a single adapter is used, the service IP can be on the same subnet as the boot IP

- When multiple adapters are used, the service IP must be on a different subnet from the boot IPs

- When multiple service IPs are used, they can be on the same subnet or on different subnets

Persistent IP rules:

- Persistent IP must be an alias, not the base address of an adapter

- Persistent IP can be in the same subnet as the service IP or in a different one

- In multiple adapter networks the persistent IP must be on a different subnet from each of the boot interface subnets

(In a single-adapter network configuration there are no such restrictions; in this case, a persistent IP might not be needed at all.)
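The service and persistent IP rules can be checked the same way. In this hypothetical sketch, the boot addresses of one node are given in CIDR form. The subnet restrictions are applied only when the node has more than one boot adapter. The "alias, not base address" rule for the persistent IP is always checked.

```python
import ipaddress


def check_alias_rules(boot_cidrs, service_ips, persistent_ip=None):
    """Check service/persistent IP rules for IPAT via aliasing on one node."""
    boot = [ipaddress.ip_interface(c) for c in boot_cidrs]
    problems = []
    if persistent_ip is not None and ipaddress.ip_address(persistent_ip) in {b.ip for b in boot}:
        problems.append(f"persistent IP {persistent_ip} must be an alias, not a base address")
    if len(boot) < 2:
        return problems  # single adapter: no subnet restrictions

    def on_boot_subnet(ip):
        addr = ipaddress.ip_address(ip)
        return any(addr in b.network for b in boot)

    for ip in service_ips:
        if on_boot_subnet(ip):
            problems.append(f"service IP {ip} is on a boot subnet")
    if persistent_ip is not None and on_boot_subnet(persistent_ip):
        problems.append(f"persistent IP {persistent_ip} is on a boot subnet")
    return problems


print(check_alias_rules(["10.1.1.10/24", "10.1.2.10/24"],
                        ["192.168.100.100", "192.168.100.101"], "192.168.100.51"))  # []
```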


During failover to another interface on the same node, the service IP address can be active on both the failed interface and the takeover interface. This is needed to preserve routing (so that routes do not disappear from the routing table), but it might cause a DUPLICATE IP ADDRESS error log entry, which you can ignore.

IPAT through aliasing is supported only on networks that support the gratuitous ARP function of AIX. Gratuitous ARP means that a host sends out an ARP request for an IP address before it starts using that address, and the request asks for that same IP. In addition to confirming that no other host is configured with this address, it ensures that the ARP cache on each machine on the subnet is updated with the new address.
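To show what a gratuitous ARP looks like on the wire, the Python sketch below builds the Ethernet frame but does not send it. The frame is an ARP request broadcast to ff:ff:ff:ff:ff:ff in which the sender IP and the target IP are the same address. It illustrates the protocol only; it is not how AIX generates the packet, and the MAC and IP values are made up. Sending it would need a raw socket and root privileges, so that part is left out on purpose.

```python
import socket
import struct


def gratuitous_arp(mac: bytes, ip: str) -> bytes:
    """Build a broadcast Ethernet frame carrying a gratuitous ARP request for `ip`."""
    if len(mac) != 6:
        raise ValueError("MAC address must be 6 bytes")
    ip_bytes = socket.inet_aton(ip)
    # Ethernet header: destination (broadcast), source, EtherType 0x0806 (ARP).
    ethernet = b"\xff" * 6 + mac + struct.pack("!H", 0x0806)
    arp = struct.pack(
        "!HHBBH6s4s6s4s",
        1,                      # hardware type: Ethernet
        0x0800,                 # protocol type: IPv4
        6, 4,                   # hardware / protocol address lengths
        1,                      # opcode 1 = request
        mac, ip_bytes,          # sender MAC / sender IP
        b"\x00" * 6, ip_bytes,  # target MAC unknown / target IP = the same IP
    )
    return ethernet + arp


frame = gratuitous_arp(bytes.fromhex("0a1b2c3d4e5f"), "10.1.1.100")
print(len(frame))  # 42
```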

To ensure that cluster events complete successfully and quickly, PowerHA disables NIS or DNS host name resolution during service IP label swapping by setting the NSORDER AIX environment variable to local. Therefore, the /etc/hosts file should be complete and must contain all PowerHA defined IP labels for all cluster nodes. After the swap completes, DNS access is restored.

If the interface holding the service IP address fails, PowerHA moves the service IP address to another available interface on the same node and on the same network; in this case, the resource group is not affected. If there is no available interface on the same node, the resource group is moved to another node with an available interface on the same logical network.
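The decision described above can be sketched as a small function. This is a simplified, hypothetical model that ignores resource group policies and node priority. The Iface class and all names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Iface:
    node: str
    network: str  # PowerHA logical network, e.g. net_ether_01
    name: str
    up: bool = True


def recovery_action(failed, interfaces):
    """Return ("swap_adapter", iface), ("move_resource_group", node) or ("no_target", None)."""
    candidates = [i for i in interfaces
                  if i.up and i != failed and i.network == failed.network]
    same_node = [i for i in candidates if i.node == failed.node]
    if same_node:
        return ("swap_adapter", same_node[0].name)  # resource group is not affected
    other_nodes = [i for i in candidates if i.node != failed.node]
    if other_nodes:
        return ("move_resource_group", other_nodes[0].node)
    return ("no_target", None)


failed = Iface("aix1", "net_ether_01", "en0", up=False)
ifaces = [failed, Iface("aix1", "net_ether_01", "en1"), Iface("aix2", "net_ether_01", "en0")]
print(recovery_action(failed, ifaces))  # ('swap_adapter', 'en1')
```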

-------------------------------

PowerHA in virtualized environments

http://www-03.ibm.com/support/techdocs/atsmastr.nsf/WebIndex/TD105185

Most new configurations today are virtualized using VIOS. It is also common practice to provide adapter redundancy through the VIOS (EtherChannel, SEA, and so on), which leads to a "single adapter configuration" in PowerHA. A single adapter configuration allows the boot IP and the service IP to be on the same subnet. Also, the boot IP can serve administrative functions, so it is not really necessary to configure a persistent IP address (however, it is possible to configure a persistent IP on the same subnet).

In a VIOS configuration, it is still recommended to configure the netmon.cf file using the new format that is specific to VIOS environments.

-------------------------------

Multicast vs Unicast

Starting with version 7.1, PowerHA uses a redesigned cluster health management layer called Cluster Aware AIX (CAA). CAA uses kernel-level code to exchange heartbeats over the network, over the SAN fabric, and through the repository disk. By default, PowerHA SystemMirror uses unicast communication for heartbeats. As an alternative, multicast communication can be configured instead of unicast. For multicast, you can optionally select a multicast address or let CAA assign one automatically.

If multicast is selected during the initial cluster configuration, it is important that multicast traffic can flow between the cluster hosts in the data center. Multicasting is a form of addressing in which a set of hosts forms a group and exchanges messages: a multicast message sent by one member of the group is received by all members. This makes cluster communication efficient, because messages often need to be sent to all nodes in the cluster. For example, a cluster member that needs to notify the remaining nodes about a critical event can do so by sending a single multicast packet. To achieve this, multicast must be enabled on the switches, along with any multicast forwarding, if applicable.

CAA creates a default multicast address if one is not specified during cluster creation. This default multicast address is formed by combining (using a bitwise OR) 228.0.0.0 with the lower 24 bits of the host's IP address. For example, in our case the host IP address is 192.168.100.51, so the default multicast address is 228.168.100.51.
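The calculation of the default multicast address is a simple bitwise OR. Here it is in Python with the standard ipaddress module; the function name is hypothetical.

```python
import ipaddress


def caa_default_multicast(host_ip: str) -> str:
    """Combine 228.0.0.0 (OR) with the lower 24 bits of the host IPv4 address."""
    host = int(ipaddress.IPv4Address(host_ip))
    base = int(ipaddress.IPv4Address("228.0.0.0"))
    return str(ipaddress.IPv4Address(base | (host & 0x00FFFFFF)))


print(caa_default_multicast("192.168.100.51"))  # 228.168.100.51
```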

Multicast communication can be tested with mping:

1. mping -v -r -c 5 <--start mping on one node in receiver mode (-r) (-v: verbose, -c: number of pings)

2. mping -v -s -c 5 <--start mping on the other node in sender mode (-s); it uses the default IP, or you can specify one with -a

(If mping fails, the network administrator needs to review the switch configuration.)

PowerHA v7.1.0 through v7.1.2 required multicast. Since PowerHA v7.1.3, unicast (normal TCP/IP socket communication) is used by default, that is, when no multicast IP address is provided while deploying the cluster. Unicast requires no extra configuration.

Using multicast may be beneficial in a cluster with more nodes (3, 4, 5, ...), but it has no benefit in a 2-node cluster, and it needs additional configuration on the PowerHA side and on the switch side. (My personal recommendation is to use unicast in a 2-node cluster.)

Changing cluster config from Multicast to Unicast:

1. lscluster -c <--check the communication mode

2. smitty cm_define_repos_ip_addr <--change the heartbeat mechanism from multicast to unicast

3. verify and sync <--verify and synchronize the cluster

4. lscluster -c <--check the communication mode again

-------------------------------

netmon.cf

In PowerHA, heartbeats are used to monitor an adapter’s state over a long period of time. When heartbeating is not working, a decision must be made about whether the local adapter has gone bad or there are other network problems. This decision is based on whether any network traffic can be seen on the local adapter (using the inbound byte count of the interface). Where VIO is involved, this test becomes unreliable, because there is no way to distinguish whether inbound traffic came in from the outside world through the VIOS or from a virtual I/O client (LPAR) in the same box.

A new netmon.cf function was added to support PowerHA in virtual I/O environments, where PowerHA could not detect a local adapter-down event. With the new format, an adapter is considered up only if it can ping a specified target.

The netmon.cf file must be placed in the /usr/es/sbin/cluster directory on all cluster nodes. Up to 32 targets can be provided for each interface. If any one of the targets is pingable, the adapter is considered “up.” (The traditional format of the netmon.cf file is not valid in PowerHA v7 and later, and is ignored; only the !REQD lines are used.)

Example content of /usr/es/sbin/cluster/netmon.cf:

!REQD en2 100.12.7.9

!REQD en2 100.12.7.10

Interface en2 is considered “up” only if it can ping either 100.12.7.9 or 100.12.7.10.

There is a general consensus against PowerHA nodes using the same VIOS, because heartbeats can then be passed between the nodes without packets leaving the box. The netmon.cf file is effective only if the targets are outside the VIO environment; cluster verification cannot check this. The order of the lines is unimportant. If more than 32 !REQD lines are specified for the same owner (interface), any extra lines are ignored. This format does not change heartbeating behavior in any way; it changes only how the decision is made about whether a local adapter is up or down.
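The !REQD logic can be sketched in Python. This is a hypothetical helper, not part of PowerHA. It collects the !REQD targets for each owner (keeping at most 32), ignores all other lines, and decides whether an interface is up from the set of targets that answered a ping. The actual pinging is left out, so `reachable` is an assumed input.

```python
from typing import Optional

MAX_TARGETS = 32  # extra !REQD lines for the same owner are ignored


def parse_netmon_cf(text):
    """Return {owner: [targets]} from the !REQD lines; every other line is ignored."""
    targets = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 3 or fields[0] != "!REQD":
            continue
        _, owner, target = fields
        owner_targets = targets.setdefault(owner, [])
        if len(owner_targets) < MAX_TARGETS:
            owner_targets.append(target)
    return targets


def interface_is_up(owner, targets, reachable) -> Optional[bool]:
    """True if ANY !REQD target of `owner` answered; None if the owner has no !REQD lines."""
    owner_targets = targets.get(owner)
    if not owner_targets:
        return None  # netmon.cf does not apply to this interface
    return any(t in reachable for t in owner_targets)


config = "!REQD en2 100.12.7.9\n\n!REQD en2 100.12.7.10\n"
print(interface_is_up("en2", parse_netmon_cf(config), {"100.12.7.10"}))  # True
```

Because the decision uses `any()`, the order of the lines does not change the result.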

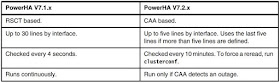

In PowerHA V7.2, the netmon.cf file is used by CAA; before PowerHA V7.2, it was used by Reliable Scalable Cluster Technology (RSCT). Starting with PowerHA V7.2, different rules apply to the netmon.cf file.

Regardless of the PowerHA version, define more than one target address per interface if possible. Using the gateway address is not recommended, because modern gateways start dropping ICMP packets under high load.

------------------------------------------------------

poll_uplink

Another way to detect link status in VIO environments without a netmon.cf file is the poll_uplink parameter. With the SEA poll_uplink method (available since VIOS 2.2.3.3), the SEA passes the link status up to the client, so a netmon.cf file with "!REQD"-style pings is no longer required.

In PowerHA V7, the network down detection is performed by CAA. CAA by default checks for IP traffic and for the link status of an interface. Therefore, using poll_uplink is advised for PowerHA LPARs. The network down failure detection is much faster if poll_uplink is used.

No special configuration is required on the VIOS/SEA side. On the PowerHA nodes (VIO clients), these steps are needed:

1. smitty clstop <--stop cluster

2. chdev -l <entX> -a poll_uplink=yes -P <--enable on all virtual interfaces on all nodes (with -P, a reboot is needed)

3. entstat -d <entX> | grep -i bridge <--after enabling, check with entstat; it should show: Bridge Status: Up

4. verify and sync <--run cluster verification and synchronization

5. smitty clstart <--start cluster

There are no additional changes to PowerHA and CAA needed. The information about the virtual link status is automatically detected by CAA. There is no need to change the MONITOR_INTERFACE setting.

(In PowerHA V7.1.3, the MONITOR_INTERFACE setting has to be changed, but in PowerHA V7.2.0 and later this is no longer needed.)

Checking the status of MONITOR_INTERFACE:

# clmgr -a MONITOR_INTERFACE query cluster

The default is MONITOR_INTERFACE=enable

------------------------------------------------------

cldump, clstat

The cldump and clstat utilities give a good overview of the cluster status. Both use the clinfoES daemon, and clinfo uses SNMP to gather information from the cluster manager.

clhosts file

This file contains IP address information which helps to enable communication between monitoring daemons on clients and the PowerHA cluster nodes. The file resides on all PowerHA cluster servers and clients in the /usr/es/sbin/cluster/etc/ directory.

When a monitor daemon starts up (for example clinfoES on a client), it reads this file to know which nodes are available for communication.

(When the clstat utility is run from a client, clinfoES obtains its information from this file.)

PowerHA automatically generates the clhosts file needed by clients when you perform a verification with the automatic corrective action feature enabled. The verification creates a /usr/es/sbin/cluster/etc/clhosts.client file on all cluster nodes, similar to this:

# Date Created: 05/19/2014 at 14:39:07

#

10.10.10.52 #ftwserv

10.10.10.51 #dallasserv

192.168.150.51 #jessica_xd

192.168.150.52 #cassidy_xd

...

This file contains all addresses, including the boot, service, and persistent IPs. Before any of the monitor utilities is used from a client node, the clhosts.client file must be copied to all clients as /usr/es/sbin/cluster/etc/clhosts (without the .client extension).
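A client-side reader of the clhosts layout shown above could look like the following Python sketch. It is hypothetical and is not clinfoES code. Blank lines and comment-only lines are skipped, and each remaining line yields the address plus the label that follows the # character.

```python
import ipaddress


def read_clhosts(text):
    """Return (address, label) pairs from clhosts-style text."""
    entries = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comment-only lines such as "# Date Created: ..."
        address, _, label = line.partition("#")
        # ip_address() raises ValueError if the first field is not a valid address.
        entries.append((str(ipaddress.ip_address(address.strip())), label.strip()))
    return entries


sample = "# Date Created: 05/19/2014 at 14:39:07\n#\n10.10.10.52 #ftwserv\n10.10.10.51 #dallasserv\n"
print(read_clhosts(sample))  # [('10.10.10.52', 'ftwserv'), ('10.10.10.51', 'dallasserv')]
```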

Links for troubleshooting clstat issues:

https://www.ibm.com/support/pages/troubleshooting-clstat-command-issues

https://www.ibm.com/support/pages/common-issues-causing-clstat-malfunction

------------------------------------------------------

ERROR: Comm error found on node: aix1.

ERROR: Comm error found on node: aix2.

ERROR: No more alive nodes left

Cluster services will not start on node(s): aix1 aix2

Please see the above verification errors for more detail.

A trace of the verification warning/error messages above is available in the file: /var/hacmp/clverify/clverify.log

The cause was a missing default gateway, so the nodes could not communicate at all. After the default gateway was created and ping worked between the nodes, the error disappeared.

------------------------------------------------------
